Davide Pasca’s programmer portfolio

Programming represents the pinnacle of exploration and creativity for me. I’m deeply involved in R&D, constantly pursuing innovative ideas and technologies. Over the years I have also found significant satisfaction in developing impactful software, particularly in the realms of gaming and interactive media, used and enjoyed by millions worldwide.

My native language is Italian 🇮🇹, I’m fluent in English 🇺🇸, decent in Japanese 🇯🇵, and I can catch some words in Russian 🇷🇺 and Chinese 🇨🇳.

Career

My current focus lies in AI Research & Development, working both at the low level with C++, Python, and PyTorch, and at the higher level with LLMs, OpenAI’s API and agent-based architectures.

I’ve successfully applied AI / ML to financial market forecasting (ENZO-TS), autopilot for airframes (XPSVR) and, more recently, virtual assistants and agents (ChatAI) running on modern LLMs (code available on GitHub).

Prior to AI, I dedicated over two decades to game development and real-time 3D graphics, gaining experience in major gaming corporations as well as spearheading projects at my own development studio.

A programmer in 2024?

I’m not a terribly nostalgic person, and while I do appreciate how programming used to be, I very much welcomed the benefits recently brought by AI tools such as ChatGPT and Copilot. I’ve always wished for more time and more brain power to explore and implement many ideas that I had, and these tools are a giant leap in that direction.

I believe (hope?) that, as much as these AI tools are an equalizer, they will continue to work as a force multiplier, especially for those of us who have extensive experience in software engineering.

| Quick Links | |
|---|---|
| 💻 My GitHub Profile | github.com/dpasca |
| 📚 My GitHub Portfolio | dpasca.github.io/portfolio |
| 📈 My Trading System | ENZO-TS |
| 📺 My YouTube Channel | DavidePasca |
| ✍️ My Blog | xpsvr.com |
| 📧 Contact | dpasca@gmail.com |

Skills Summary

- Software Engineer with 30+ Years of Experience

- Performance Optimization: C/C++, assembly

- Fluent in C/C++, Python, JavaScript

- AI / Machine Learning: ANN from ground up, PyTorch, OpenAI API

- Algorithmic Trading: strategy development, backtesting, portfolio management

- Video Game Development: 3D engine, physics, game logic, UI

- Real-Time 3D Graphics: OpenGL, Direct3D, software rendering

- Image Processing & Compression: DCT, Wavelets, Zero-Tree Encoding

- Flight Simulation: flight dynamics, avionics, weapon systems

- Platforms: Windows, Linux, Mac, Web, Mobile, Game Consoles

Leadership & Language Skills

- Management: Capable of running a small business and leading a development team

- Languages: Italian (native), English (fluent), Japanese (conversational)

Employment History

| Start | End | Title | Company | Location |
|---|---|---|---|---|
| 2010/11 | Current | Co-founder and CTO | NEWTYPE K.K. | Tokyo, Japan |
| 2006/11 | 2010/4 | Senior Software Engineer | Square Enix Co., Ltd. | Tokyo, Japan |
| 2001/8 | 2006/9 | Senior Software Engineer | Arika Co., Ltd. | Tokyo, Japan |
| 2000/5 | 2000/12 | Senior Software Engineer | Gama Internet Tech. USA, Inc. | Costa Mesa, CA, USA |
| 1999/3 | 2000/3 | Software Engineer | SquareSoft, Inc. | Costa Mesa, CA, USA |
| 1995/9 | 1998/6 | Senior Programmer | Digital Dialect | West Hills, CA, USA |
| 1990/11 | 1995/8 | Programmer | Tabasoft, s.a.s. | Rome, Italy |

Projects

The following list of projects is limited to major milestones or more recent products. My professional experience started in 1990; for the sake of brevity, however, the earliest project listed is from 1994.

| # | Year | Name | Type | Company |

|---|---|---|---|---|

| 1 | 2024 | AI Research & Development | AI / Machine Learning | NEWTYPE |

| 2 | 2022 | ENZO-TS | Fintech (crypto, trading) | NEWTYPE |

| 3 | 2022 | xComp | Image processing | NEWTYPE |

| 4 | 2022 | 3D for Melarossa app | 3D visualization | NEWTYPE |

| 5 | 2018 | XPSVR | Flight Sim, VR | NEWTYPE |

| 6 | 2012 | Fractal Combat X | Mobile game | OYK Games |

| 7 | 2010 | Final Freeway 2R | Mobile game | OYK Games |

| 8 | 2010 | RibTools renderer | Computer graphics | Self |

| 9 | 2010 | GPGPU engine R&D | Computer graphics | Square Enix |

| 10 | 2005 | Tetris: TGM Ace | Console game | Arika |

| 11 | 2004 | SH-Light 2004 | Computer graphics | Self |

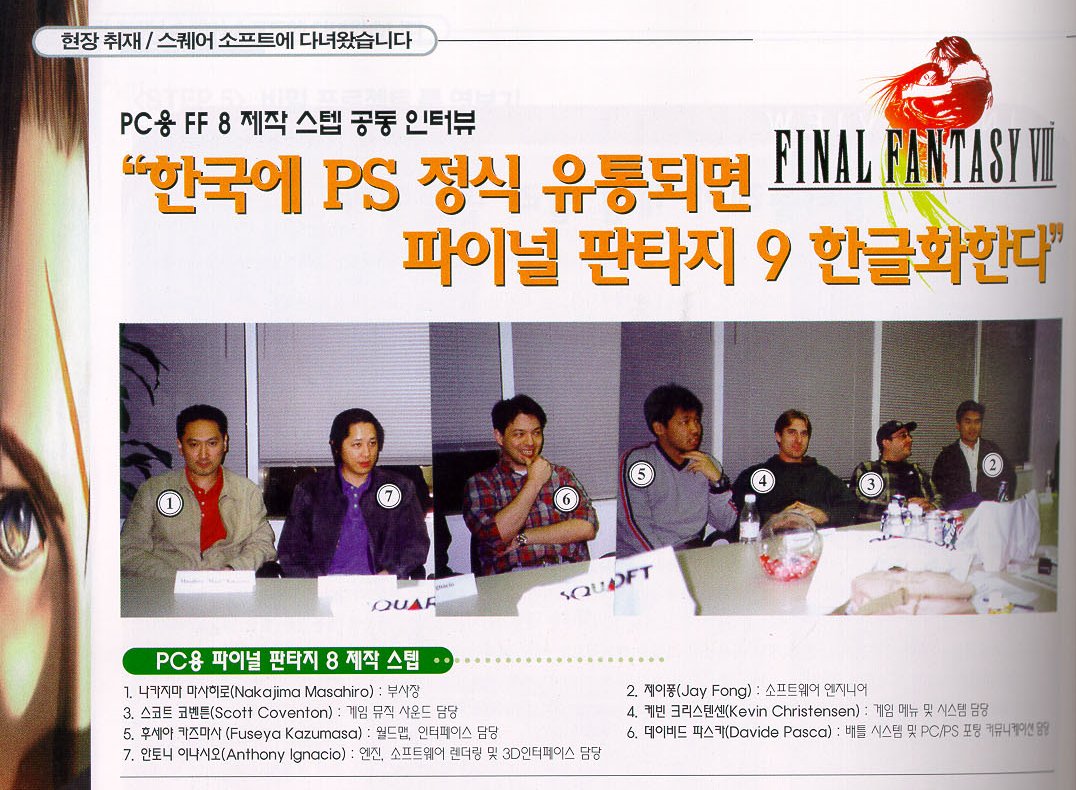

| 12 | 2000 | Final Fantasy VIII | PC game | SquareSoft |

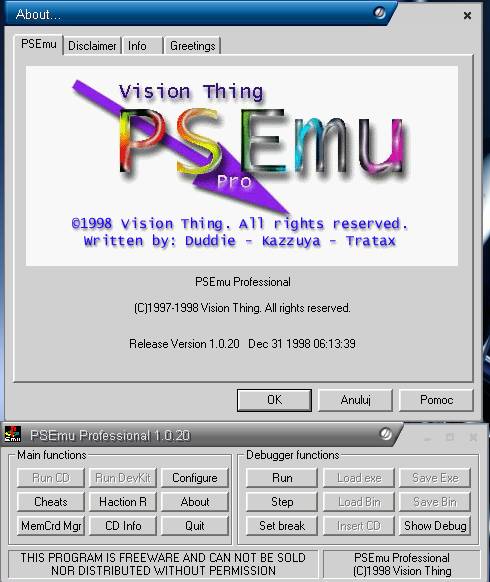

| 13 | 1999 | PSEmu Pro | Hardware emulation | Vision Thing |

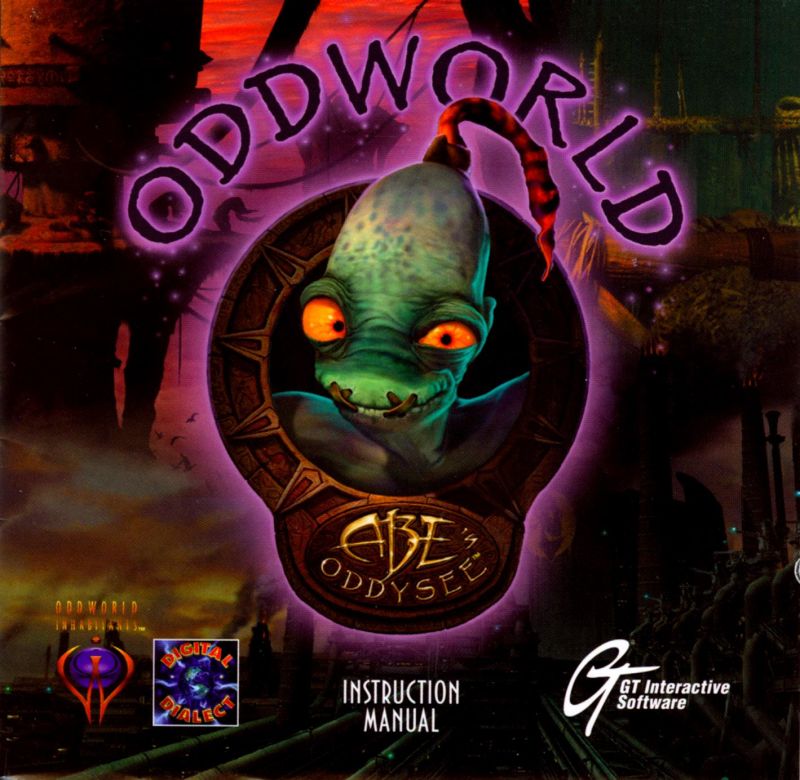

| 14 | 1997 | Abe's Oddysee | PC game | Digital Dialect |

| 15 | 1996 | Battle Arena Toshinden | PC game | Digital Dialect |

| 16 | 1997 | RTMZ | Computer Graphics | Self |

| 17 | 1994 | Easy-CD Pro | Macintosh application | Tabasoft |

1. 2024 - AI Research & Development

- Financial market forecasting using Artificial Neural Networks

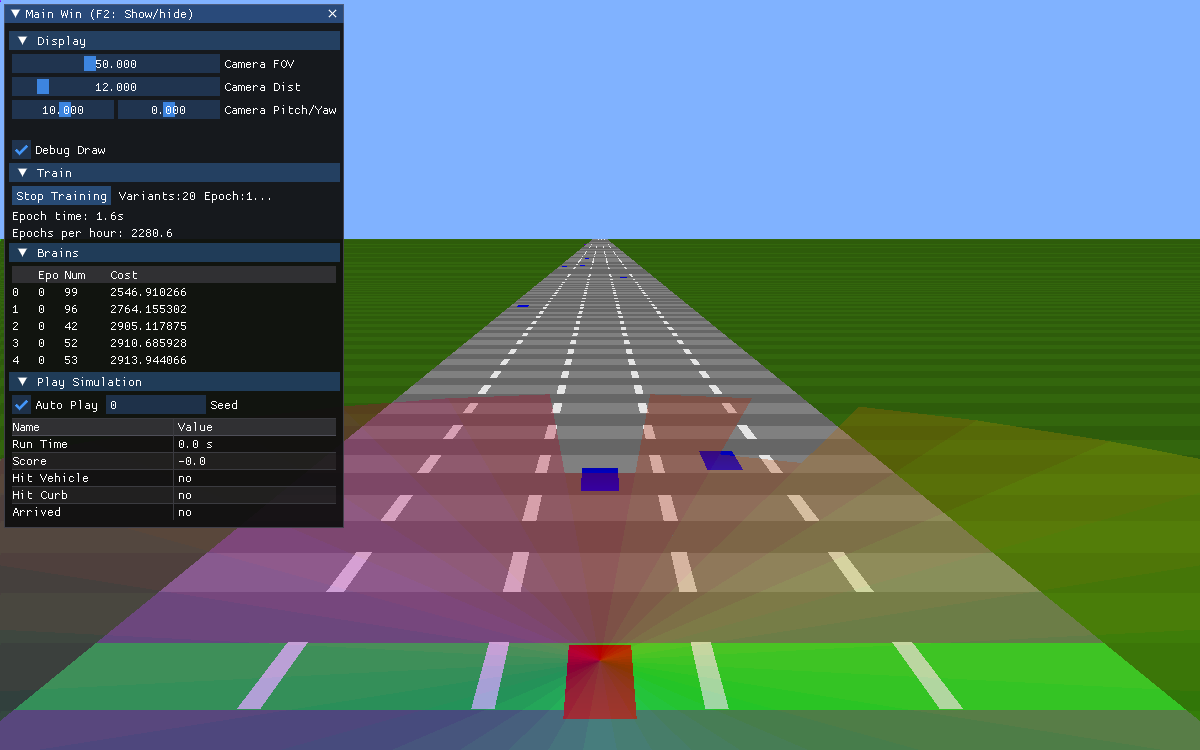

- Vehicle navigation: pathfinding and obstacle avoidance

- Custom C++ Neural Networks implementation (inference and training)

- Neural Networks with PyTorch using C++ and Python

- Genetic algorithms, neuro-evolution, REINFORCE-ES

Professional Journey

Below are the highlights of my involvement in AI and machine-learning at various stages of my career.

1) Simulation and AI

My journey in machine-learning started in 2017, when I began designing auto-pilot logic for the plane and missile airframes in my experimental flight simulator XPSVR.

Simulation is a key component of machine-learning as it forms the basis for training at a much faster pace and a much reduced cost, especially when dealing with extremely expensive or dangerous tasks, such as flight and weapon delivery.

2) Algorithmic Trading and Neural Networks

In 2018 I started to apply basic ML to financial market forecasting for the ENZO Trading System.

By early 2023, my focus shifted towards Artificial Neural Networks, tackling increasingly complex tasks from autonomous driving to sophisticated time-series prediction models, now deployed in live trading.

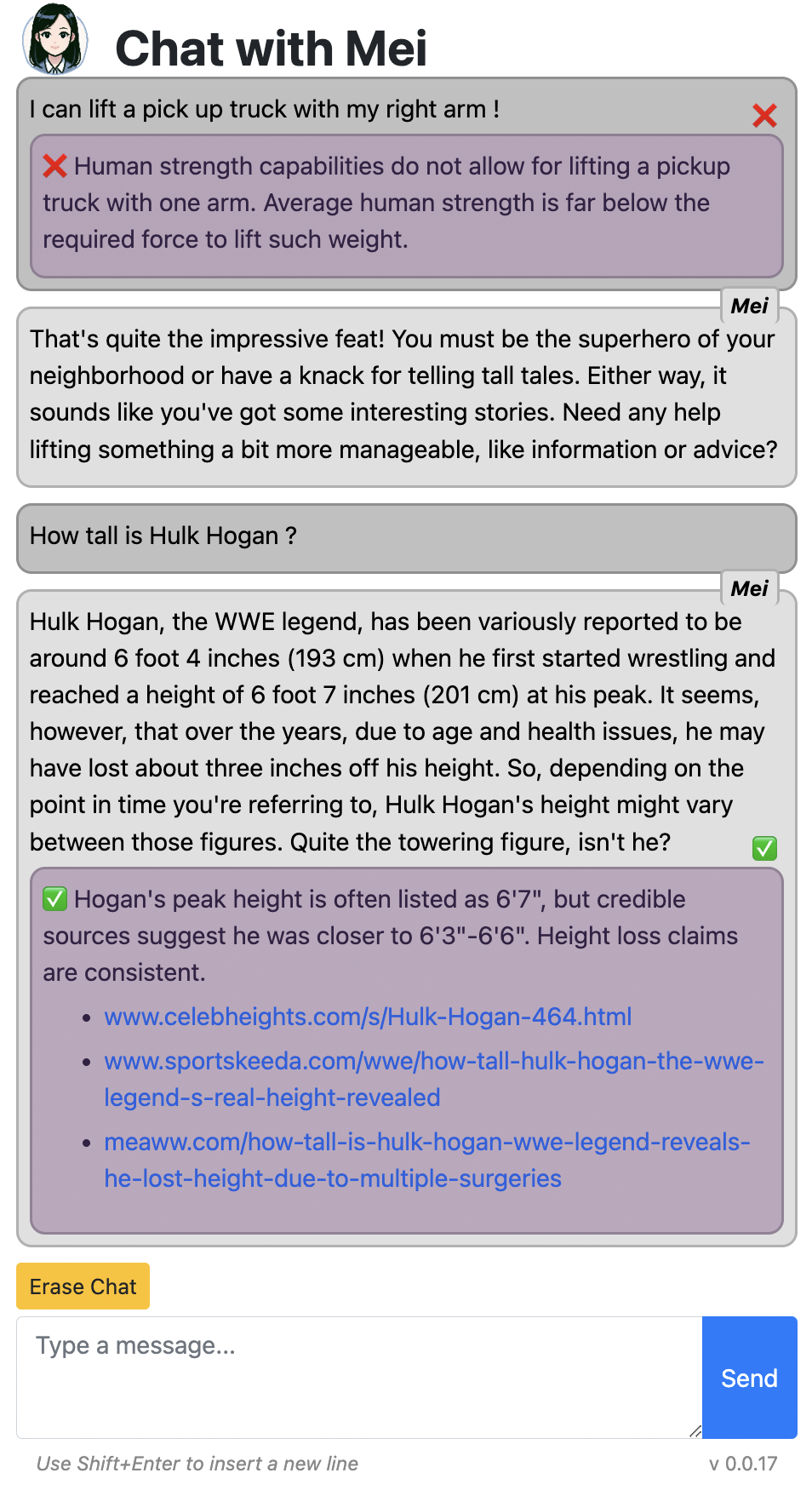

3) LLM-based Virtual Assistants and Agents

In late 2023, I developed ChatAI, a virtual assistant integrating OpenAI’s API that uses function-calling and implements a multi-agent architecture to enhance the user’s experience in terms of usefulness and reliability. Function-calling and prompt-injection are used to give the assistant a sense of location and time of the user.
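As an illustration of the function-calling idea described above, here is a minimal sketch (not the actual ChatAI code). It assumes the JSON tool schema accepted by the `tools` parameter of OpenAI's Chat Completions API; the tool name `get_user_context`, its fields, and the placeholder location are hypothetical. No network call is made: only the schema and the local dispatch step are shown.

```python
import json
from datetime import datetime, timezone

# Tool description in the JSON shape used by the Chat Completions "tools" parameter.
# The tool name and description are hypothetical, chosen for this sketch.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_user_context",
        "description": "Return the user's current time and approximate location.",
        "parameters": {"type": "object", "properties": {}, "required": []},
    },
}]

def get_user_context(now=None, location="Rome, Italy"):
    """Local handler. The location is a placeholder; a real app would get it from the client."""
    now = now or datetime.now(timezone.utc)
    return {"utc_time": now.isoformat(timespec="seconds"), "location": location}

# Maps tool names requested by the model to local Python handlers.
HANDLERS = {"get_user_context": get_user_context}

def dispatch_tool_call(name, arguments_json):
    """Run the handler the model asked for and return a JSON string for the tool reply."""
    args = json.loads(arguments_json or "{}")
    return json.dumps(HANDLERS[name](**args))
```

The string returned by `dispatch_tool_call` would be sent back to the model as the content of a tool message, so the next completion can refer to the user's time and place.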

Parallel agents are used to fact-check the conversation and provide additional information on the subject being discussed. This approach, while requiring additional token budget, addresses the hallucination issue that arises from naive use of an LLM. The increase in reliability for the user is ultimately worth the additional cost, as it saves time that the user would otherwise have to spend for manual verification or, worse, risk being completely misinformed.

The assistant operates as a web app, leveraging Flask, Bootstrap, and additional external modules for code syntax highlighting and LaTeX rendering, but at the core it’s a Python application that is adaptable to other platforms.

This is an ongoing project used both for deployment and internal research.

4) My Open Sourced AI Projects

See the sources of my other AI projects & experiments on GitHub.

Insights Gained

Building a system from simple cases was essential in grasping the core concepts involved.

In time-series prediction, Evolution Strategies (ES) proved effective, particularly with limited computational resources. While backpropagation is generally more efficient and likely a better solution long-term, the evolutionary approach facilitated a better balance between exploration and exploitation. This balance is crucial in chaotic environments like financial markets, where the potential for useful predictions is debatable and top-tier research often remains undisclosed due to its financial significance.

In my opinion, time-series prediction might be more challenging for backpropagation due to the chaotic nature of its task and loss-function, which must be carefully designed to avoid local minima and overfitting.

This research also highlighted the profound effects of training data, network architecture, hyper-parameters, and loss function design. Crafting a loss function is particularly nuanced in trading, where there isn’t a universal truth: many suitable outcomes exist, some more realistic than others, when it comes to training a model.

Technically, I honed my skills in using PyTorch both in Python and C++ (LibTorch), focusing on minimizing CPU-GPU data transfers, crucial for CPU-executed evolutionary strategies.

A key optimization was increasing the tensors’ dimensionality, reducing the number of batches needed for each generation’s processing. Neuro-evolution’s explorative nature demands evaluating numerous models, a process that can be computationally intensive without efficient parallelization.
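To make the evolutionary approach more concrete, below is a minimal pure-Python sketch of a basic Evolution Strategy loop on a toy objective. It is not the code used for ENZO-TS: the function names, the quadratic fitness, and all hyper-parameters are assumptions chosen for illustration. In a PyTorch version, the whole population would typically be stacked along an extra tensor dimension so that one batched forward pass evaluates every candidate.

```python
import random

def fitness(params, target):
    """Toy objective (hypothetical): higher is better, maximum at params == target."""
    return -sum((p - t) ** 2 for p, t in zip(params, target))

def evolve(target, generations=200, pop_size=50, sigma=0.1, lr=0.01, seed=0):
    """Basic ES: perturb the parameters, score every candidate, move along the weighted noise."""
    rng = random.Random(seed)
    theta = [0.0] * len(target)
    for _ in range(generations):
        # One Gaussian noise vector per population member
        noise = [[rng.gauss(0.0, 1.0) for _ in theta] for _ in range(pop_size)]
        scores = [fitness([t + sigma * e for t, e in zip(theta, eps)], target)
                  for eps in noise]
        # Standardize the scores so the step size does not depend on the fitness scale
        mean = sum(scores) / pop_size
        std = (sum((s - mean) ** 2 for s in scores) / pop_size) ** 0.5 or 1.0
        for i in range(len(theta)):
            grad = sum((s - mean) / std * eps[i]
                       for s, eps in zip(scores, noise)) / (pop_size * sigma)
            theta[i] += lr * grad
    return theta
```

Each generation needs `pop_size` independent evaluations, which is why batching them into a single larger tensor operation pays off on a GPU.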

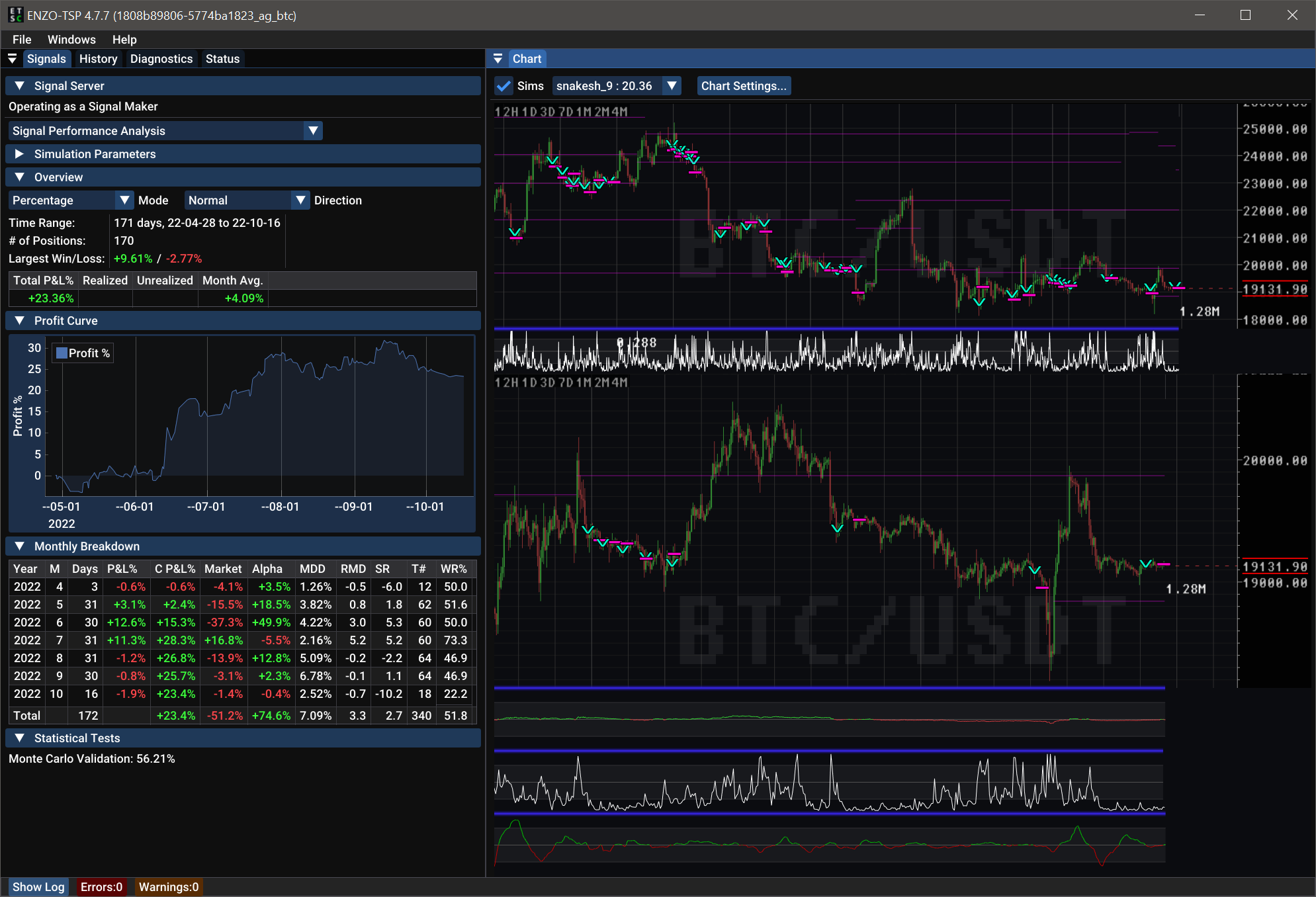

2. 2022 - ENZO-TS

A complete trading system for cryptocurrencies. See www.enzobot.com.

My work on the project

I developed most of the front-end and trading models in C++. Some of the major tasks are:

- Implemented machine-learning-driven trading algorithms and indicators

- Live trading on Bybit and Binance exchanges via REST and WebSocket APIs

- Backtesting infrastructure

- Simulation of exchange orders, including HFT (sub-minute) data

- Reporting of P&L, Calmar ratio, Sharpe ratio, Monte Carlo validation, etc.

- Risk management (see video Levels of risk management. Our approach summarized.)

- Live profit calculation and reporting

- TCP/IP client-server infrastructure to distribute trading signals to customers

- IPC protocol based on BSD sockets

- UI based on Dear ImGui

- Chart and indicators rendering in OpenGL
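For reference, the Sharpe and Calmar ratios mentioned in the reporting item can be sketched as follows. This is a generic textbook formulation, not the ENZO-TS implementation; the function names and the default of 365 periods per year (crypto markets trade every day) are assumptions.

```python
import math

def sharpe_ratio(returns, periods_per_year=365, risk_free=0.0):
    """Annualized Sharpe ratio from per-period returns (sample standard deviation)."""
    excess = [r - risk_free / periods_per_year for r in returns]
    mean = sum(excess) / len(excess)
    var = sum((x - mean) ** 2 for x in excess) / (len(excess) - 1)
    return mean / math.sqrt(var) * math.sqrt(periods_per_year)

def max_drawdown(equity):
    """Largest peak-to-trough loss of an equity curve, as a fraction of the peak."""
    peak, worst = equity[0], 0.0
    for value in equity:
        peak = max(peak, value)
        worst = max(worst, (peak - value) / peak)
    return worst

def calmar_ratio(equity, years):
    """Compound annual growth rate divided by the maximum drawdown."""
    cagr = (equity[-1] / equity[0]) ** (1.0 / years) - 1.0
    return cagr / max_drawdown(equity)
```

The sketch does not guard against degenerate inputs such as constant returns or an equity curve with no drawdown, both of which would cause a division by zero.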

What I learned

- Challenges of building consistently profitable strategies

- Practical machine-learning application and techniques to reduce curve fitting

- REST API connectivity and crypto exchange protocols

- Risk and portfolio management techniques

- Leverage trading and short selling

- Usage of Dear ImGui for a complex project

- Reliance on multiprocessing for fault-tolerance

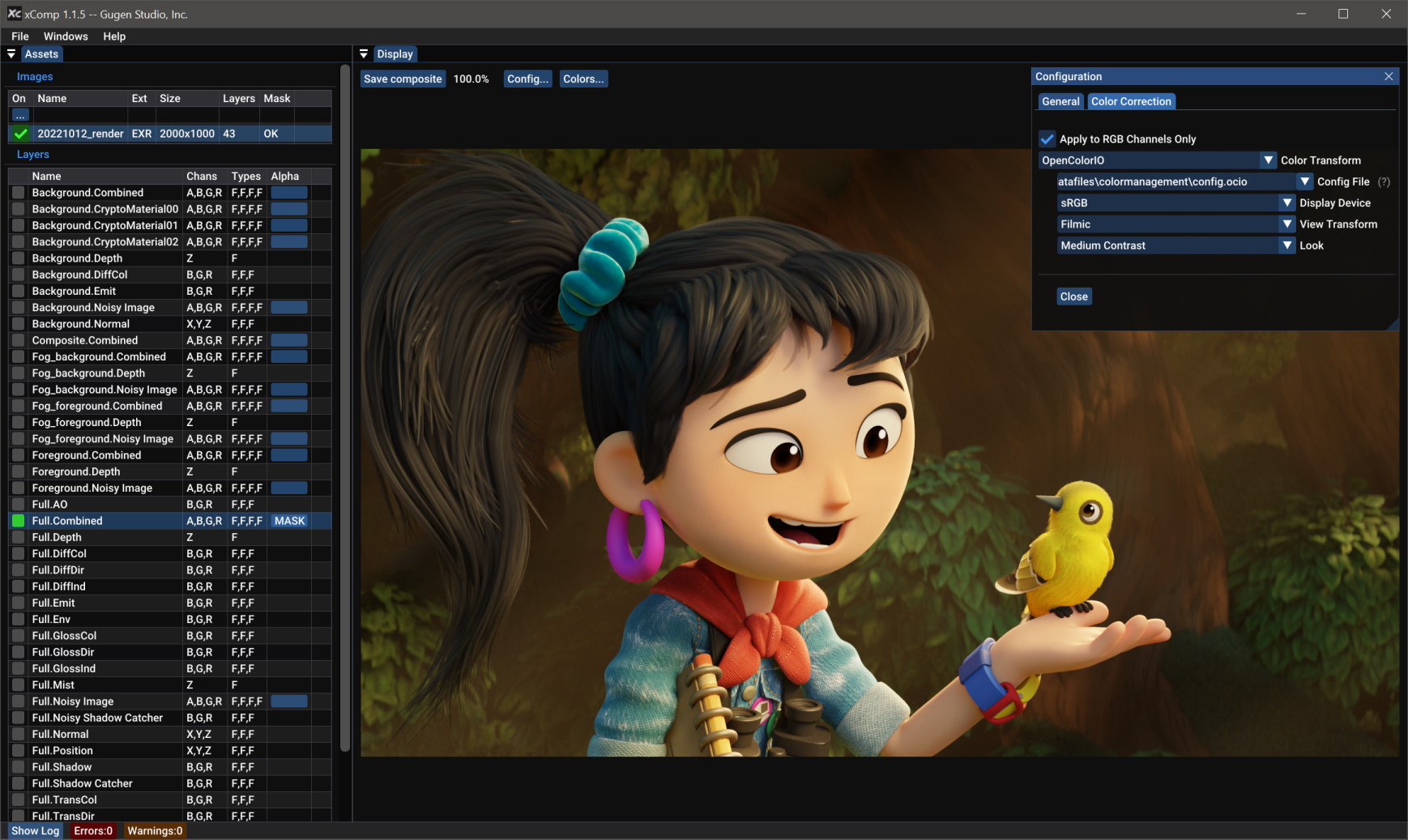

3. 2022 - xComp

An image viewer capable of building composites of a stack of images with transparent regions. Source code at github.com/gugenstudio/xComp

This tool was built to visualize, in real time, region updates of a render on top of previous renders. Images found in a chosen folder are quickly composited with alpha blending in a stack. OpenColorIO transformations can optionally be applied to the composite image, which can be saved out as a PNG.

My work on the project

I was the sole developer on this project. The main tasks were:

- Setting up a multi-platform UI infrastructure with Dear ImGui and GLFW

- Implementation and use of OpenEXR and OpenColorIO

- Image compositing with alpha blending and resampling

- UI to manage EXR layers and masks
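The compositing of a stack of images with transparent regions can be illustrated with the standard "over" operator. The sketch below works on single RGBA pixels with straight (non-premultiplied) alpha in the 0..1 range; whether xComp uses straight or premultiplied alpha is not stated here, so that choice and the function names are assumptions.

```python
def over(src, dst):
    """Composite one RGBA pixel (src) over another (dst), straight alpha, values in 0..1."""
    sr, sg, sb, sa = src
    dr, dg, db, da = dst
    out_a = sa + da * (1.0 - sa)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)  # both pixels fully transparent

    def blend(s, d):
        return (s * sa + d * da * (1.0 - sa)) / out_a

    return (blend(sr, dr), blend(sg, dg), blend(sb, db), out_a)

def composite_stack(pixels):
    """Composite a list of RGBA pixels ordered from bottom to top."""
    result = (0.0, 0.0, 0.0, 0.0)
    for pixel in pixels:
        result = over(pixel, result)
    return result
```

For example, a half-transparent blue pixel over an opaque red one gives `(0.5, 0.0, 0.5, 1.0)`, while a fully transparent pixel leaves the layers beneath it unchanged.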

What I learned

- Build and usage of OpenEXR and OpenColorIO

4. 2022 - 3D for Melarossa app

A wasm package for the 3D display of a human figure (male and female) with morphing animation of vertices between different body weights and fat distributions. See the announcement (in Italian).

My work on the project

- Adapted my current C++ and OpenGL ES-based 3D engine for the job

- Added necessary system code and build scripts to compile and run via Emscripten

- Wrote a shader for deformation and blending to morph individual body sections at different BMIs

- Implemented Image Based Lighting (IBL) for added realism

- Implemented real-time shadow mapping, also working on mobile phones
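The morphing between body shapes can be thought of as interpolation between keyframe meshes. The sketch below shows the idea on the CPU with piecewise-linear blending. The real work is done in a shader and may use a different blending scheme, so the function name, the data layout, and the linear interpolation are all assumptions.

```python
from bisect import bisect_right

def morph_vertices(keyframes, bmi):
    """Blend vertex positions between the two keyframe meshes that bracket `bmi`.

    keyframes: list of (bmi_value, [(x, y, z), ...]) sorted by bmi_value,
               all meshes sharing the same vertex order (hypothetical layout).
    """
    keys = [k for k, _ in keyframes]
    if bmi <= keys[0]:
        return list(keyframes[0][1])
    if bmi >= keys[-1]:
        return list(keyframes[-1][1])
    hi = bisect_right(keys, bmi)
    (b0, v0), (b1, v1) = keyframes[hi - 1], keyframes[hi]
    t = (bmi - b0) / (b1 - b0)  # 0 at the lower keyframe, 1 at the upper one
    return [tuple(a + (b - a) * t for a, b in zip(p, q)) for p, q in zip(v0, v1)]
```

Morphing individual body sections independently would amount to applying a different `t` per section, for example through a per-vertex weight.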

What I learned

- Deployment and optimization of WebGL 1.0 and 2.0 via Emscripten

5. 2018 - XPSVR

An experimental flight simulator built to mimic the F-35 Lightning II jet fighter. The project targeted VR for full immersion.

The avionics display includes the famous 2-screen touch display of the F-35 and its windowing system, which allows configuring the panels and maximizing and minimizing various elements.

Rendering is performed with the OYK Games rendering engine, which I originally wrote for mobile games and later expanded for PC GPUs and OpenGL 4.5.

See more details on the XPSVR’s blog.

My work on the project

I was the sole developer on this project. The main tasks were:

- Writing the game engine, based on OpenGL and OpenAL

- Physically Based shaders, IBL and CSM (Cascaded Shadow Maps)

- Underlying rigid-body physics engine

- Flight model for the airplane and missiles

- Autopilot for the airplane and the weapons

- VR support for Oculus

- Custom image compression and geometry LOD for the terrain

- GUI system, customized to simulate the F-35 look and feel

- Advanced avionics such as TSD (Tactical Situation Display) with moving cursor and DAS (Distributed Aperture System), the see-through system

- Floating HUD simulating Head Mounted Display

What I learned

- Building a simple FDM (Flight Dynamics Model)

- Weapon systems and guidance (Missile Launch Envelope, Proportional Navigation, etc.)

- Building a basic autopilot to maintain orientation and altitude

- Building avionic displays

- The F-35 user interface

- Use of CSM for large terrains
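Proportional Navigation, mentioned in the list above, commands a lateral acceleration proportional to the rotation rate of the line of sight (LOS) and to the closing speed. Below is a minimal 2D sketch of the textbook law `a = N * Vc * LOS_rate`. It is not the XPSVR code; the function name and the default navigation gain of 3 are assumptions.

```python
import math

def pn_acceleration(missile_pos, missile_vel, target_pos, target_vel, nav_gain=3.0):
    """Lateral acceleration command from 2D Proportional Navigation.

    Positive values mean "turn counter-clockwise". Positions and velocities are (x, y) pairs.
    """
    # Relative position and velocity of the target with respect to the missile
    rx, ry = target_pos[0] - missile_pos[0], target_pos[1] - missile_pos[1]
    vx, vy = target_vel[0] - missile_vel[0], target_vel[1] - missile_vel[1]
    range_sq = rx * rx + ry * ry
    # Angular rate of the line of sight (2D cross product over squared range)
    los_rate = (rx * vy - ry * vx) / range_sq
    # Closing speed: rate at which the range shrinks
    closing_speed = -(rx * vx + ry * vy) / math.sqrt(range_sq)
    return nav_gain * closing_speed * los_rate
```

On a perfect collision course the line of sight does not rotate, so the command is zero. A target crossing the missile's path produces a command that turns the missile toward the point ahead of the target.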

6. 2012 - Fractal Combat X

An arcade flight combat game with more than 1M downloads.

Fractal Combat X (successor of Fractal Combat), is an arcade flight combat game for mobile phones that has reached a wide distribution across multiple markets around the world.

It’s built with an in-house game engine which I developed in C++ on top of OpenGL ES. The game features a continuous terrain LOD capable of showing high detail at a very far distance with no perceptible far clipping.

See the joint technical presentation with Intel at GDC 2015, “Getting There First: OpenGL ES 3.1 and Intel Extensions in Fractal Combat X”

My work on the project

I was the sole developer on this project except for the Android platform. The main tasks were:

- Writing the underlying game engine

- Terrain LOD system with run-time light baking

- Implementation of OpenGL ES 3.1 tessellation, when available

- Dynamic skyline, with stratosphere and sun glare effects

- Dynamic destruction model for any 3D object

- Shadow mapping and IBL suitable for mobile

- VR support for Oculus GearVR, Google Cardboard or similar

- Custom image texture and audio compression

- Fractal terrain generation for certain stages

- 3D audio based on OpenAL

- GUI system, HUD

What I learned

- Terrain LOD and light baking on low-spec mobile systems

- OpenGL ES optimization for various mobile targets

- Use of in-app purchase and advertisement API calls

- Implementation of fun game controls for arcade flight combat games

- VR techniques for playability and to improve comfort for the player

7. 2010 - Final Freeway 2R

An arcade driving game for mobile with over 1M downloads.

Final Freeway 2R (successor of Final Freeway), is an arcade racing game for mobile phones that has reached a wide distribution across multiple markets around the world.

It’s built with an in-house game engine which I developed in C++ on top of OpenGL ES. The game features an unconventional presentation, common in the arcades of the 80s and early 90s.

My work on the project

I was the sole developer on this project except for the Android platform. The main tasks were:

- Writing the underlying game engine

- Writing the road model tuned to give a sense of speed and playability

- A simple stage definition format to simplify creation of new stages

- 3D audio based on OpenAL

- GUI system

What I learned

- Development on early iOS and Android NDK platforms

- Tuning for playability

- OpenGL ES optimization for various mobile targets

- API interfaces for various advertisement platforms

- Publishing and promotion of mobile games

8. 2010 - RibTools renderer

RibTools is an experimental open-source implementation of Pixar’s basic RenderMan REYES rendering architecture. The parser handles simple RenderMan rib scenes and shaders, which run on a custom VM. Rendering of the micropolygons is performed via SIMD CPU instructions.

Parallelism is implemented at all levels:

- Instruction level (adaptive N-way SIMD)

- Many-core hardware (multi-threading)

- Network-distributed rendering (custom protocol over TCP/IP)

See more details on: Implementing an experimental RenderMan compliant REYES renderer

My work on the project

I was the sole developer on this project. The main tasks were:

- Writing the rib scene parser

- RenderMan shader parser and compiler

- Implementation of a custom shader VM with SIMD opcodes

- Implementation of a REYES-style micropolygon renderer

- Flexible SIMD functions to write N-way parallel code

- Display and debugging infrastructure with OpenGL and GLUT

- Server-client code for distributed rendering

What I learned

- REYES-style micropolygon rendering

- Writing a shader compiler and VM

- Writing N-way vector and thread parallelism

- Basics of implementation of network-distributed rendering

- Use of CMake for multiplatform builds

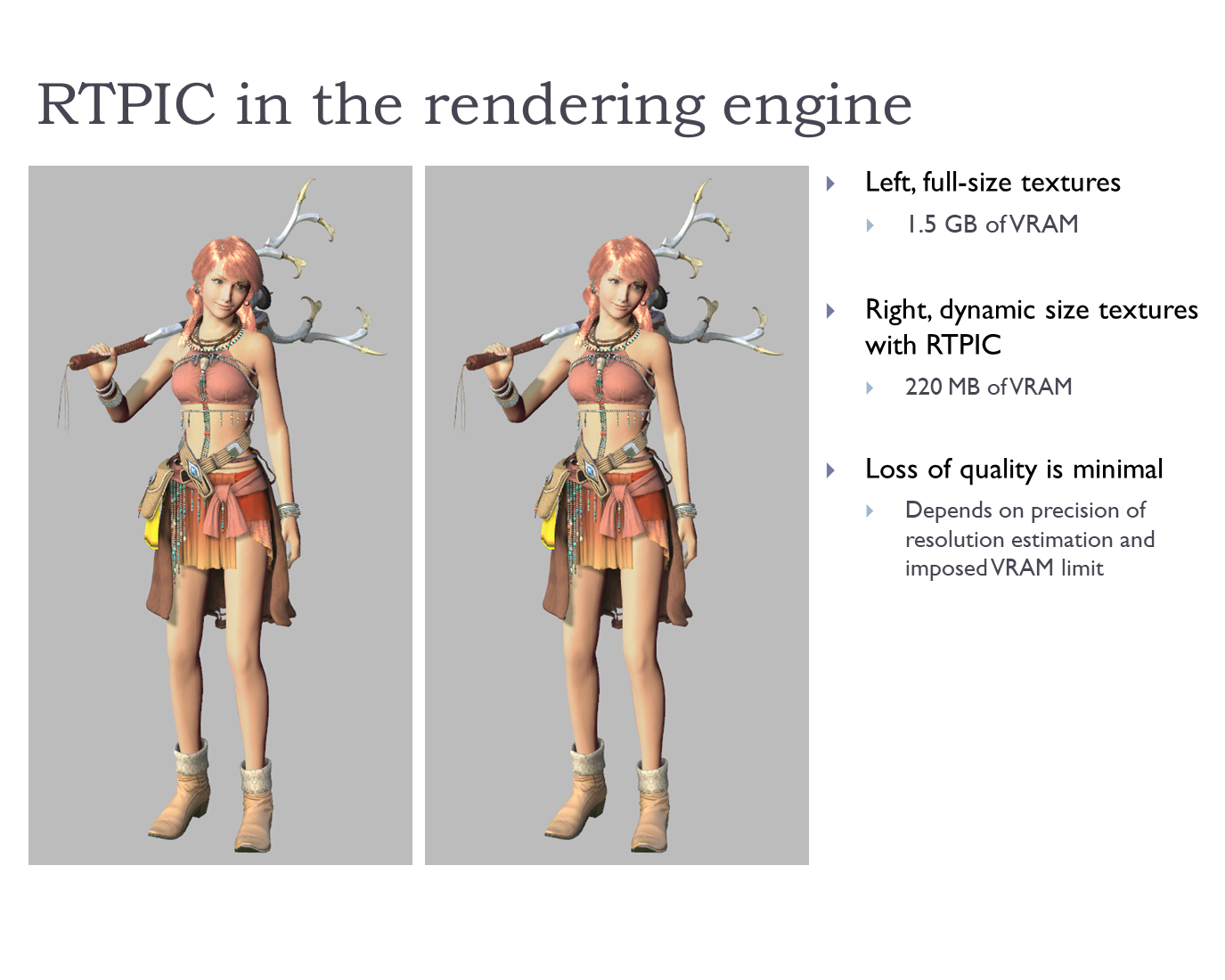

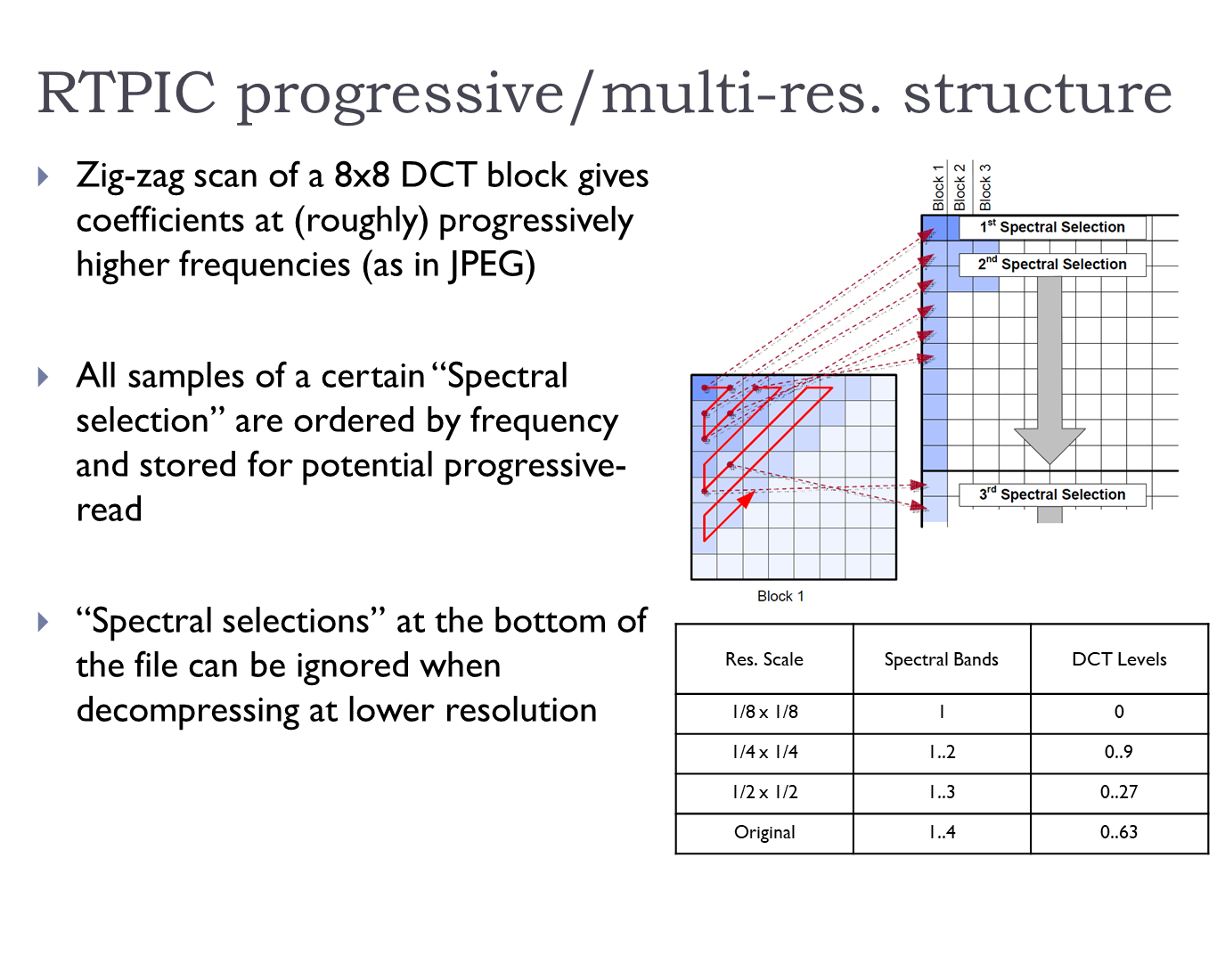

9. 2010 - GPGPU engine R&D

While employed at Square Enix, Japan, I led the development of a next-generation 3D engine capable of optimizing production rendering scenes and reproducing them in real-time with Direct3D and many-core hardware.

The development was built around production-rendering graphics assets for the cut scenes of Final Fantasy XIII. Those cut scenes were originally meant to be played only as movie clips. Our job was to play back those same scenes in a real-time 3D engine, regardless of the complexity of the original assets.

The engine supported very high resolution geometry and multi-gigabyte textures as well as per-vertex animation. I wrote rendering and compression technology capable of reducing computing and storage for the target hardware. Rendering was performed with Direct3D 10. Compression used lossy approaches based on DCT, Wavelets and Zero-tree encoding.

My work on the project

I was the lead engineer on the runtime portion of the project. My main tasks were:

- Research & Develop new technology to handle massive production-rendering assets in real-time

- Writing the runtime rendering engine in Direct3D

- Writing custom GPGPU-friendly CODECs for texture and 3D geometry compression and streaming

- Working with hardware manufacturers to optimize graphics drivers

What I learned

- Multiprocessor and advanced SIMD architectures

- Maximizing performance around graphics drivers

- Managing and optimizing large 3D assets for production rendering

- Geometry compression techniques

- Workflow and assets for production rendering

- Team and project management

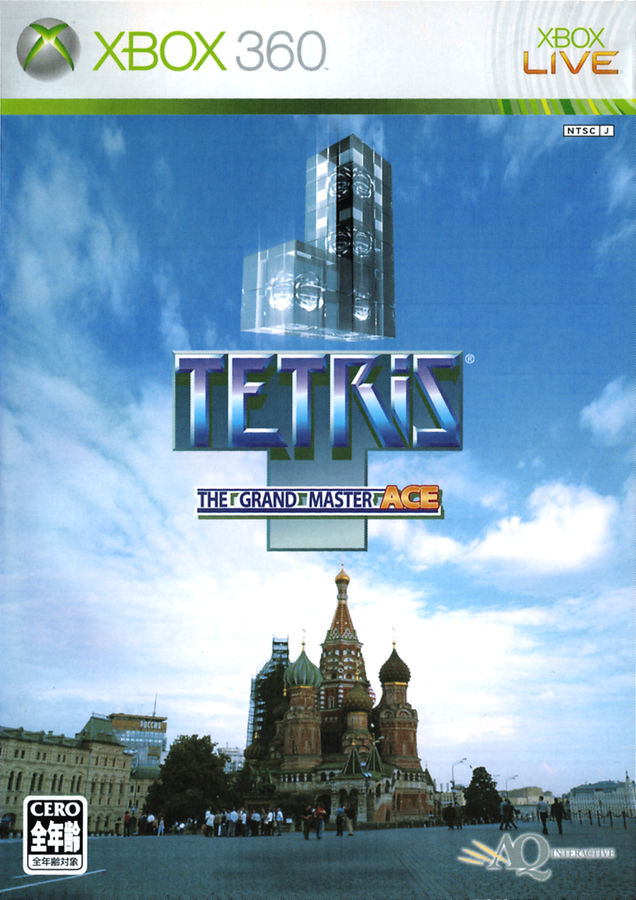

10. 2005 - Tetris: TGM Ace

While employed at Arika, Japan, I worked on “Tetris: The Grand Master Ace”, a launch title for Xbox 360 in Japan.

My involvement was mostly as a system programmer, taking care of the Xbox 360 platform, including the rendering engine, network programming, and audio engine. At the time we were working on an extra-tight schedule to match the Xbox 360 release.

My work on the project

I was the senior engineer on the project. My main tasks were:

- Writing the game engine in DirectX for the Xbox 360 (graphics, audio I/O)

- PC version of the game engine for development

- Display and particle effects

- Network code for multiplayer: lobby, VDP packets

- Created a low-level reliable-protocol on top of VDP

- Analyze and solve publishing requirements for the Live store

What I learned

- Xbox 360 platform

- Network multiplayer code with lobby system and UDP/VDP packets

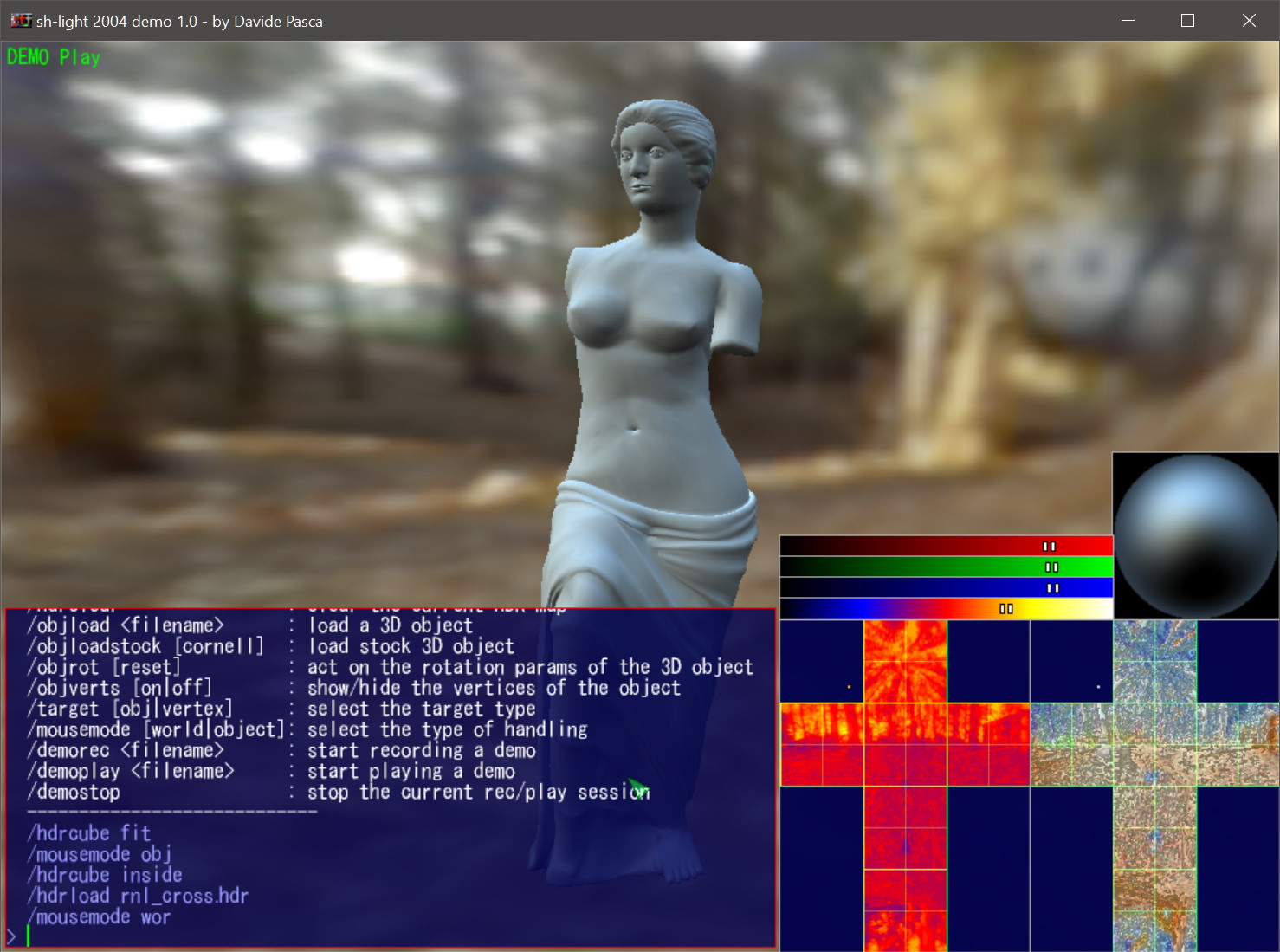

11. 2004 - SH-Light 2004

A real-time demo that uses Spherical Harmonics (SH) to quickly apply Image Based Lighting (IBL) for diffuse and ambient lighting.

The user can paint on a virtual cube map to freely apply lighting without the limitation of classical light sources.

The SH coefficients for the IBL are calculated on the CPU at every frame. The rendering is performed in OpenGL with a shader that calculates lighting of each vertex based on those SH coefficients.

This technique is based on the famous paper: “An Efficient Representation for Irradiance Environment Maps” by Ravi Ramamoorthi and Pat Hanrahan. I’ve since used Spherical Harmonics for diffused lighting or ambient lighting in every 3D engine that I wrote.
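The core of the technique is a closed-form quadratic expression that turns nine SH lighting coefficients into the irradiance for a given surface normal. The sketch below evaluates that expression for one color channel, using the constants published in the paper. The dictionary layout keyed by `(l, m)` and the function name are assumptions made for this example, which is not the demo's shader code.

```python
# Constants from Ramamoorthi & Hanrahan, "An Efficient Representation for
# Irradiance Environment Maps".
C1, C2, C3, C4, C5 = 0.429043, 0.511664, 0.743125, 0.886227, 0.247708

def sh_irradiance(L, normal):
    """Irradiance for a unit normal (x, y, z), given SH lighting coefficients.

    L maps (l, m) -> coefficient for one color channel (hypothetical layout);
    missing entries are treated as zero.
    """
    x, y, z = normal

    def g(l, m):
        return L.get((l, m), 0.0)

    return (C1 * g(2, 2) * (x * x - y * y)
            + C3 * g(2, 0) * z * z
            + C4 * g(0, 0)
            - C5 * g(2, 0)
            + 2.0 * C1 * (g(2, -2) * x * y + g(2, 1) * x * z + g(2, -1) * y * z)
            + 2.0 * C2 * (g(1, 1) * x + g(1, -1) * y + g(1, 0) * z))
```

Because only nine coefficients per channel are involved, recomputing them every frame and evaluating this expression per vertex is cheap enough for real-time use.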

My work on the project

I was the sole developer on this project. The main tasks were:

- Implementation of the Spherical Harmonics coefficients on the CPU at every frame

- Shader that generates colors based on the SH coefficients

- A basic UI with an on-screen console and painting of the cube maps

- A system to record and replay events, for the demo mode

What I learned

- Application of Spherical Harmonics for lighting

12. 2000 - Final Fantasy VIII

While employed at SquareSoft, USA, I worked on the Windows port of the Final Fantasy VIII PlayStation game.

My main job was that of creating an abstraction layer between the PSX code and SquareSoft’s graphics library, which was based on DirectX. This drastically reduced porting time, as most code continued to compile as it were on the PlayStation.

Some code still needed fixing, in a few cases because of memory access bugs that did not surface on the original platform, as the first PlayStation lacked an MMU.

My work on the project

- Writing the abstraction layer that simulated PlayStation APIs and hardware on PC

- Alternative software rendering path for special effects, normally not possible via DirectX

- Adapt rendering code for the wild variety of graphics accelerators of the era

- Develop techniques to improve rendering quality with original (low res) assets

What I learned

- I built a deeper understanding of the PlayStation hardware

- Game code of Japanese RPG games

- Work in a mixed Japanese/international environment

13. 1999 - PSEmu Pro

In 1997 I started working on the PSEmu Pro, the first PlayStation emulator.

PSEmu Pro was possibly also the most consequential of the PSX emulators, due to its DLL plugin system that simplified the creation of more modern emulators, such as ePSXe.

Subsequent emulators could focus development on a subset of the hardware, while relying on existing plugins made for PSEmu Pro to support other key portions of the hardware, such as the GPU and the GTE.

My initial involvement was writing the GPU (Graphics Processing Unit) rendering emulation in software rendering. I eventually joined the other two original developers (Duddie and Tratax), with the Kazzuya nickname, forming the final core team.

Once in the team, I worked on anything necessary, most notably the GTE (Geometry Transformation Engine) and MDEC (Macroblock Decoder) emulation, constantly balancing performance and accuracy.

This was a non-commercial effort, done for the sake of the challenge itself. I worked on this in my spare time, coordinating the effort online via IRC (Internet Relay Chat).

My work on the project

- GPU software rendering emulation

- GTE emulation, used for 3D math

- MDEC emulation, used for video MJPEG-like decoding

- Gamepad controller plugin

What I learned

- Details about the PlayStation hardware

- Working with a fully-remote team

- Optimization techniques for fixed-point math operations

- Optimization techniques for video decoding
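As a small illustration of fixed-point arithmetic of the kind used for 3D math on integer-only hardware, here is a sketch with 12 fractional bits. The choice of 12 bits and all function names are assumptions for this example; it is not taken from the PSEmu Pro sources.

```python
FRAC_BITS = 12          # assumed number of fractional bits
ONE = 1 << FRAC_BITS    # fixed-point representation of 1.0

def to_fixed(x):
    """Convert a float to fixed-point (rounded to the nearest step)."""
    return int(round(x * ONE))

def to_float(f):
    """Convert a fixed-point value back to a float."""
    return f / ONE

def fx_mul(a, b):
    """Multiply two fixed-point values; the shift removes the doubled fraction bits."""
    return (a * b) >> FRAC_BITS

def rotate_z(x, y, cos_fx, sin_fx):
    """Rotate a 2D fixed-point vector using precomputed fixed-point cosine and sine."""
    return (fx_mul(x, cos_fx) - fx_mul(y, sin_fx),
            fx_mul(x, sin_fx) + fx_mul(y, cos_fx))
```

Every operation here is an integer multiply, add, or shift, which is what made this representation attractive on CPUs with slow or absent floating-point units.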

14. 1997 - Abe's Oddysee

In 1997 I worked on the PC port (Windows and MS-DOS) of the now famous Oddworld: Abe’s Oddysee.

The game was originally written for the Sony PlayStation, which at the time had specialized hardware capabilities unavailable to consumer PCs, requiring creative solutions to achieve the same results with just a CPU. Although the game didn’t use 3D graphics, it ran at a 640x240 resolution with 16-bit color. The PC version was required to run at 640x480 with the same color resolution, with no hardware acceleration, using CPUs of the caliber of a Pentium 120.

My approach was to continue to use a 640x240 base resolution, but upscaling it with a cheap but effective smoothing filter that was fast enough for the target CPUs.
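The exact filter is not described here, but one cheap way to double the number of scanlines is to copy each source row and insert the average of each pair of adjacent rows between them. The sketch below does this for packed 16-bit pixels, assuming an RGB565 layout; the mask `0xF7DE` clears the lowest bit of each channel so that both pixels can be halved and added without bits leaking between channels. The pixel format, the filter, and the function names are all assumptions.

```python
def avg565(a, b):
    """Approximate average of two packed RGB565 pixels using only masks, shifts and an add."""
    return ((a & 0xF7DE) >> 1) + ((b & 0xF7DE) >> 1)

def upscale_rows(image):
    """Double the row count: keep each source row, then add a row blended with the next one.

    image: list of rows, each row a list of packed RGB565 pixel values.
    """
    out = []
    last = len(image) - 1
    for i, row in enumerate(image):
        out.append(list(row))
        if i < last:
            out.append([avg565(a, b) for a, b in zip(row, image[i + 1])])
        else:
            out.append(list(row))  # no row below: duplicate the last one
    return out
```

A 640x240 frame processed this way becomes 640x480 at the cost of a couple of integer operations per generated pixel.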

I also wrote an MDEC (Macroblock Decoder) simulator, to playback the movie assets in the original PlayStation format. The clips were essential to the narration of the game and were sometimes mixed with the live animation.

Video decoding had specialized hardware on the PSX, but had to be done with the CPU on PC, requiring some special optimizations to maintain the necessary frame rate to decode the 320x240 video clips and upscale them to 640x480.

My work on the project

- Wrote a rasterizer capable of rendering the PlayStation graphics primitives with a CPU on PC

- MDEC simulation capable of playing native MJPEG PlayStation videos on PC

- Various tasks to adapt and port the original code

What I learned

- In-depth knowledge of the PlayStation’s GPU calls

- Optimization techniques for 16-bit color rendering on SVGA resolution

- MDEC CODEC definition and implementation

15. 1996 - Battle Arena Toshinden

In 1995 I worked on the PC port (MS-DOS) of Battle Arena Toshinden, a 3D fighting game and early PlayStation title.

For this port, we never received the full source code, so much of the logic had to be written from scratch. My job was mostly writing the rendering engine, which had to run on regular Intel 486 and Pentium CPUs. I also wrote logic for the camera movement and some LAN network code (no Internet play yet!).

This was my first real job in the game industry, after a few years programming desktop software and developing 3D tech demos in my spare time (see RTMZ below).

A demo of the game is currently playable online at oldgames.sk !

My work on the project

- Wrote a software rasterizer for the 3D characters and environment

- Basic UI for options and main screen

- Logic for camera and controls

What I learned

- First steps into actual game development, albeit from a technical perspective

16. 1997 - RTMZ

RTMZ (previously RTMX) is a tech demo that I developed in the mid 90s.

The goal for the demo was that of showing off the real-time 3D rendering capabilities that I accumulated in years of personal R&D, as a teenager with a passion for computer graphics and games. Thankfully, this landed my first job in the game industry. Nothing sells like a cool demo.

In 2022 I released the old source code on GitHub for historical purposes.

The demo is written mostly in ‘C’ (C++ still had major performance issues at the time), and it ran on MS-DOS at VGA and SVGA resolutions with a 256-color palette.

Major features are:

- Gouraud shading

- Texture mapping

- Basic lighting and material properties

- Z-Buffer (optional)

- Cool menu interface

My work on the project

- I wrote a rasterizer with subpixel accuracy

- Implemented a materials system, within the limitations of 256 colors

- Wrote readers for OBJ, 3D Studio and Videoscape 3D formats

- Implemented a cool 3D UI and record+playback system for demo purposes

What I learned

- This was the testbed for my early 3D rendering

- Portions of my 3D library mimicked OpenGL calls, which at the time was not available on PC

17. 1994 - Easy-CD Pro

In 1993 I worked on the Mac (“Macintosh” at the time) edition of Easy-CD Pro, the first CD burning software for the Mac.

Although by that time I had been developing in ‘C’ on the Mac for a while, this was the first commercial product with a relatively wide reach that I worked on.

This was also my first experience working with the English language with a native speaker, when the programmer for the ISO image engine (originally built on a Sun workstation !) came to Rome to help us integrate his engine into the product.

My work on the project

- I wrote the user interface, including a tree-view of the filesystem

- Implemented conversion of file names from Mac long format to ISO 9660

- Integrated the ISO image engine

- Various optimizations (file sorting, etc.)
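The file name conversion mentioned above can be illustrated with a sketch that maps an arbitrary name to an ISO 9660 Level 1 identifier: uppercase letters, digits and underscores only, at most 8 characters plus a 3-character extension, followed by the `;1` version suffix. The actual rules used in Easy-CD Pro are not documented here; this function and its replacement policy are assumptions, and it does not resolve collisions between names that shorten to the same identifier.

```python
import re

def to_iso9660_level1(mac_name):
    """Map a long file name to an 8.3 ISO 9660 Level 1 identifier (hypothetical policy)."""
    base, dot, ext = mac_name.rpartition(".")
    if not dot:               # no extension in the original name
        base, ext = mac_name, ""

    def clean(part):
        # Uppercase, then replace anything outside A-Z, 0-9 and "_" with "_"
        return re.sub(r"[^A-Z0-9_]", "_", part.upper())

    return f"{clean(base)[:8]}.{clean(ext)[:3]};1"
```

For example, `to_iso9660_level1("My Report 1994.text")` returns `MY_REPOR.TEX;1`.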

What I learned

- CD burning software and formats

- Working in the context of an international team